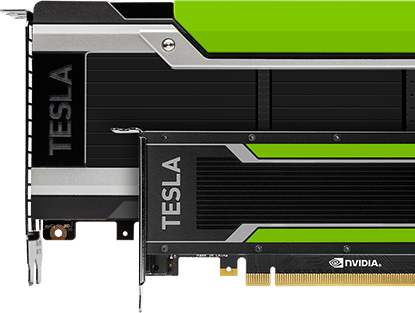

With an eye on bolstering its efforts in artificial intelligence and neural network inferencing, chip giant NVIDIA has announced its latest Pascal-based GPU accelerators, the Tesla P40 and Tesla P4.

According to NVIDIA, the new cards will respond up to 45 times faster than CPUs and around four times faster than previous-generation GPUs.

The new GPU accelerators will be accompanied by an array of software tools designed specifically to improve overall efficiency.

NVIDIA has made it clear that it intends to devote a considerable part of its resources to strengthening its existing platform for deep learning. As of today, the platform is divided between training GPUs and inferencing GPUs.

The P40 and P4 are designed exclusively for inferencing, which uses trained deep neural networks to identify images, text, or speech in response to queries from people and devices.

“Based on the Pascal architecture, these GPUs feature specialized inference instructions based on 8-bit (INT8) operations, delivering 45x faster response than CPUs and a 4x improvement over GPU solutions launched less than a year ago,” reads an NVIDIA description of the new P40 and P4.

The new accelerators will replace the Tesla M40 and Tesla M4 when they arrive. Both the P40 and the P4 come with DeepStream SDK and TensorRT support.

“With the Tesla P100 and now Tesla P4 and P40, NVIDIA offers the only end-to-end deep learning platform for the data center, unlocking the enormous power of AI for a broad range of industries,” said Ian Buck, general manager of accelerated computing at NVIDIA.

“They slash training time from days to hours. They enable insight to be extracted instantly. And they produce real-time responses for consumers from AI-powered services.”