Tesla Inc., led by Elon Musk, is one of the most successful startups in the world. Valued at $51 billion, Tesla is now the most valuable automaker in the USA, ahead of even General Motors by $1 billion in market valuation. The company is thriving mostly on strong demand for its electric cars. At an event last year, Elon Musk announced his intent to launch fully autonomous cars, but because of legal hurdles around the acceptance of self-driving technology, that plan has been dropped in favor of semi-autonomous cars.

Concerns about Tesla’s Autopilot gained support from a recent accident in Florida in which a man was killed in a crash with a tractor-trailer. Now an independent researcher has claimed that Tesla’s Autopilot may prove fatal to cyclists on the road. Heather Knight, an expert in human-robot interaction with a Ph.D. from Carnegie Mellon University, has raised concerns about the capabilities of Tesla’s Autopilot. She took to the micro-blogging website Twitter to share her review.

I just published “Tesla Autopilot Review: Bikers will die” https://t.co/h0QOY8vAV8

— Heather Knight (@heatherknight) May 27, 2017

Her review of Tesla’s self-driving technology was published on Medium. In it she describes how she and a friend took one of Tesla’s cars for a drive to test its potential. Their aim was to check whether Tesla cars offer the level of human-robot interaction expected from machines of this caliber.

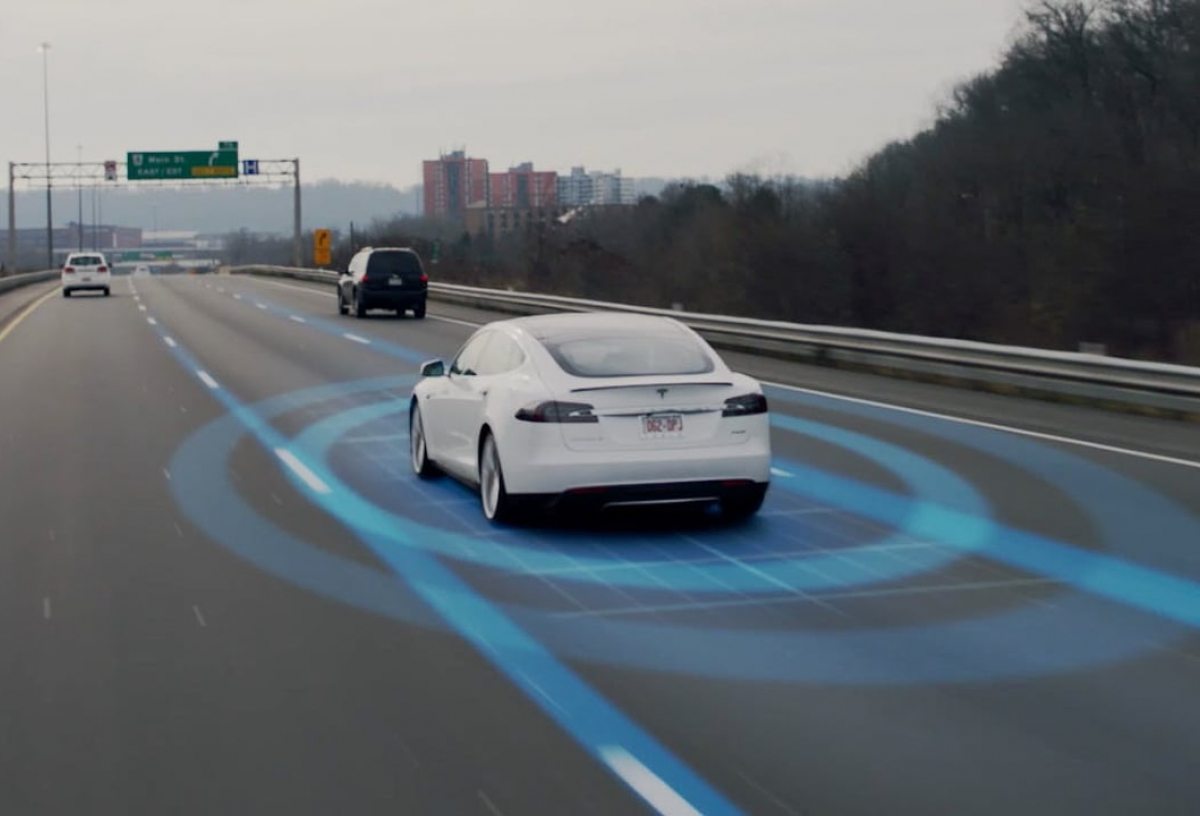

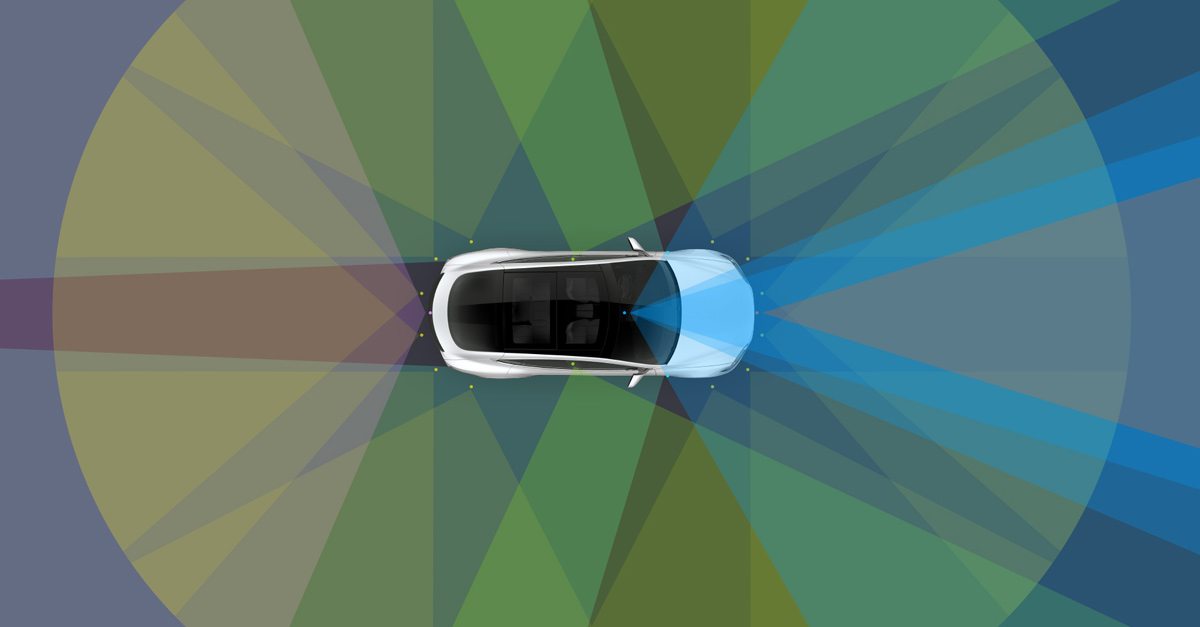

During her test drive, Heather found that Autopilot fared well for the most part on the streets and highways around her city. But when bicycles came near the car, she found its “agnostic behavior around bicyclists to be frightening.” She watched the “Situation Awareness Display,” which is fitted inside the car and shows passengers what Autopilot sees and senses while driving. Its readings were accurate for other cars but grossly inaccurate for bicycles on the road.

In her review, Heather Knight describes the problem with identifying bicycles in these words: “I’d estimate that Autopilot classified ~30% of other cars, and 1% of bicyclists. Not being able to classify objects doesn’t mean the tesla doesn’t see that something is there, but given the lives at stake, we recommend that people NEVER USE TESLA AUTOPILOT AROUND BICYCLISTS! this grade is not for the detection system, it’s for exposing the car’s limitations. a feature telling the human to take over is incredibly important.”

In Tesla’s defense, the company has already warned that its cars aren’t fully autonomous. Drivers should always keep their hands on the steering wheel and stay attentive to their surroundings. Autopilot also has a safety feature: if the driver lets go of the steering wheel for a prolonged time, Autopilot disengages automatically and cannot be re-engaged until the car is stopped and restarted.

Separately, according to a story published in the Wall Street Journal, a Tesla owner reported that Autopilot stopped his car in time to prevent an accident, before a negligent cyclist could crash into its hood.

Despite Autopilot’s limitations in recognizing cyclists, Heather gave the Situation Awareness Display an A+ because, according to her, “it helps the driver understand shortcomings of the car, i.e., its perception sucks.”

Tesla has not yet confirmed the alleged anomaly in its semi-autonomous driving technology, and we are yet to hear its side of the story. Meanwhile, the comment threads on Heather’s post are growing longer with each passing day, and she has even tried to calm down some Tesla lovers over her study. If her allegations are true, we would like Tesla to address them, because the all-round success of Tesla’s Autopilot is crucial to further innovation in, and social acceptance of, artificial intelligence and similar technologies.