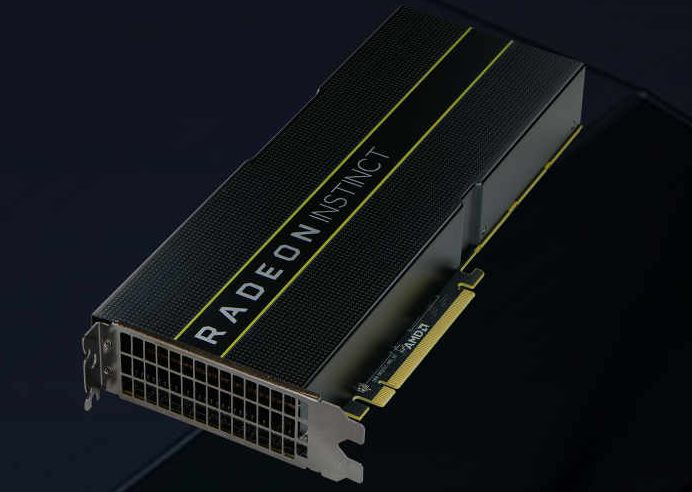

AMD officially unveiled its new Radeon Instinct family of deep learning accelerators earlier this week. The lineup includes the Radeon Instinct MI25, which is powered by a Vega 10 GPU with 4096 stream processors, comes equipped with 16GB of HBM2 memory and has a 300W TDP.

The Vega-based Instinct MI25 is designed for large-scale machine intelligence and deep learning applications in the data center. It makes use of several new Radeon technologies intended to deliver higher compute throughput for deep learning training workloads.

In total, the Radeon Instinct lineup comprises three cards. The other two are the Fiji XT-based Radeon Instinct MI8 and the Polaris 10-based Radeon Instinct MI6. "MI" in the name stands for Machine Intelligence, while the number roughly corresponds to the card's peak half-precision (FP16) compute throughput in TFLOPS.
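The naming rule can be checked against the FP16 figures quoted in the spec lists of this article. This is a minimal sketch that assumes the model number is the FP16 figure rounded to the nearest whole TFLOPS; that rounding convention is our reading of the naming scheme, not a statement from AMD.

```python
# Peak FP16 throughput in TFLOPS, as quoted in the spec lists of this article.
FP16_TFLOPS = {
    "MI25": 24.6,
    "MI8": 8.2,
    "MI6": 5.7,
}


def model_number(name: str) -> int:
    """Extract the numeric suffix from a card name such as 'MI25'."""
    return int(name.removeprefix("MI"))


for name, tflops in FP16_TFLOPS.items():
    # Assumption: the model number is the FP16 figure rounded to the nearest integer.
    print(f"{name}: {tflops} TFLOPS FP16 -> rounds to {round(tflops)}")
    assert round(tflops) == model_number(name)
```

All three cards follow the pattern: 24.6 rounds to 25, 8.2 to 8 and 5.7 to 6.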

The Radeon Instinct MI25 is the fastest card in the Instinct lineup.

AMD Radeon Instinct MI25 Deep Learning Accelerator Specs:

- Vega 10 Architecture

- 4096 Stream Processors

- 24.6 TFLOPS Half Precision (FP16)

- 12.3 TFLOPS Single Precision (FP32)

- 768 GFLOPS Double Precision (FP64)

- 16GB HBM2 Memory

- 484GB/sec Memory Bandwidth

- 300W TDP

- PCIe Form Factor

- Full Height Dual Slot

- Passive Cooling
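The MI25 throughput figures are internally consistent, and a short calculation shows how they relate. This sketch assumes each stream processor completes one fused multiply-add (counted as 2 FLOPs) per clock in FP32. Under that assumption the 12.3 TFLOPS rating implies a peak clock of about 1.5 GHz, which the spec list itself does not state. The FP16 figure is exactly twice the FP32 figure (consistent with packed FP16 math), and FP64 runs at roughly 1/16 of the FP32 rate.

```python
# Figures from the MI25 spec list.
STREAM_PROCESSORS = 4096
FP16_TFLOPS = 24.6
FP32_TFLOPS = 12.3
FP64_GFLOPS = 768

# Assumption: one fused multiply-add (2 FLOPs) per stream processor per clock in FP32.
FLOPS_PER_SP_PER_CLOCK = 2

# Solve peak FP32 = SPs * FLOPs/clock * clock for the clock the rating implies.
implied_clock_ghz = (
    FP32_TFLOPS * 1e12 / (STREAM_PROCESSORS * FLOPS_PER_SP_PER_CLOCK) / 1e9
)

# Ratios between the precision tiers (TFLOPS converted to GFLOPS for FP64).
fp16_to_fp32 = FP16_TFLOPS / FP32_TFLOPS
fp32_to_fp64 = FP32_TFLOPS * 1000 / FP64_GFLOPS

print(f"Implied peak clock: {implied_clock_ghz:.2f} GHz")  # 1.50 GHz
print(f"FP16:FP32 ratio: {fp16_to_fp32:.1f}")  # 2.0
print(f"FP32:FP64 ratio: {fp32_to_fp64:.1f}")  # 16.0
```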

AMD Radeon Instinct MI8 Deep Learning Accelerator Specs:

- 8.2 TFLOPS FP16 or FP32 Performance

- Up To 47 GFLOPS Per Watt Peak FP16 or FP32 Performance

- 4GB HBM1 on 4096-bit Memory Interface

- Passively Cooled Server Accelerator

- Large BAR Support for Multi-GPU Peer to Peer

- ROCm Open Platform for HPC-Class Rack Scale

- Optimized MIOpen Libraries for Deep Learning

- MxGPU SR-IOV Hardware Virtualization

AMD Radeon Instinct MI6 Deep Learning Accelerator Specs:

- 5.7 TFLOPS FP16 or FP32 Performance

- Up To 38 GFLOPS Per Watt Peak FP16 or FP32 Performance

- 16GB Ultra-Fast GDDR5 Memory on 256-bit Memory Interface

- Passively Cooled Server Accelerator

- Large BAR Support for Multi-GPU Peer to Peer

- ROCm Open Platform for HPC-Class Scale Out

- Optimized MIOpen Libraries for Deep Learning

- MxGPU SR-IOV Hardware Virtualization
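The MI8 and MI6 lists quote efficiency rather than power draw, but dividing peak throughput by peak efficiency gives the board power those figures imply. Because both cards run FP16 and FP32 at the same rate, one calculation covers both precisions. This is a back-of-the-envelope sketch that assumes the "up to" efficiency is reached at peak throughput; the results (about 174 W for the MI8 and 150 W for the MI6) are derived estimates, not official TDP ratings.

```python
# Peak FP16/FP32 throughput (TFLOPS) and peak efficiency (GFLOPS per watt),
# taken from the MI8 and MI6 spec lists.
CARDS = {
    "MI8": (8.2, 47),
    "MI6": (5.7, 38),
}


def implied_power_watts(tflops: float, gflops_per_watt: float) -> float:
    """Power at which the peak throughput yields the quoted peak efficiency."""
    # Convert TFLOPS to GFLOPS, then divide by GFLOPS per watt to get watts.
    return tflops * 1000 / gflops_per_watt


for name, (tflops, efficiency) in CARDS.items():
    print(f"{name}: ~{implied_power_watts(tflops, efficiency):.0f} W")
```

Since the efficiency figures are rounded to whole GFLOPS per watt, the derived wattages carry a few watts of uncertainty.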