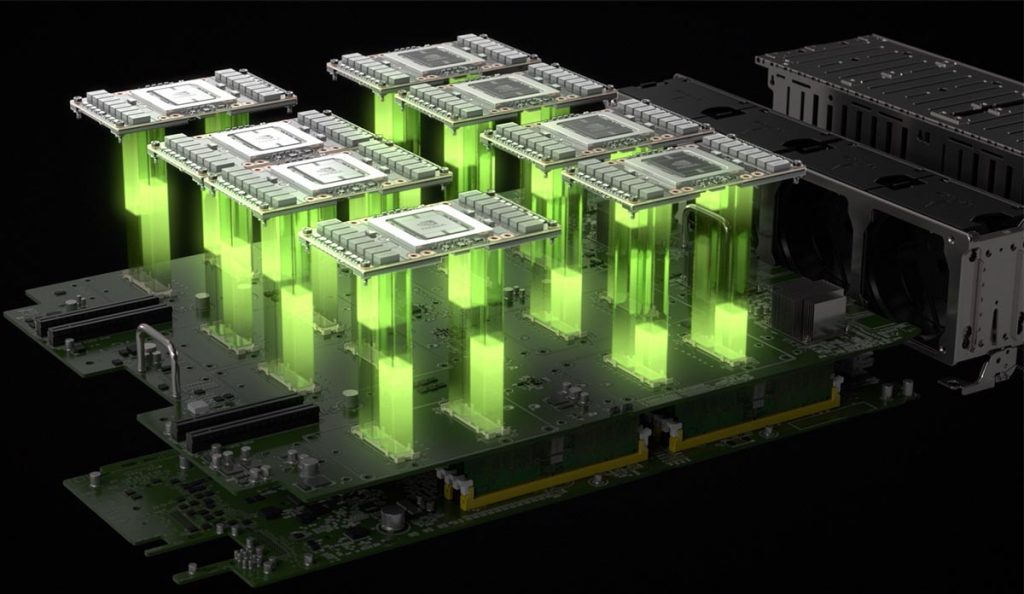

It seems NVIDIA is working on a Multi-Chip-Module (MCM) package, a design that places several chips on the same interposer and links them with fast I/O lanes. Examples of MCM packages include AMD's Fiji and Vega GPUs and NVIDIA's Pascal P100 and Volta V100 GPUs. While these packages carry just one GPU, they hold several DRAM dies.

There are multiple ways to design an MCM package. What sets NVIDIA's work-in-progress MCM solution apart is that it is likely to break away from the conventional approach of pairing a few DRAM dies with one monolithic GPU on the package. Instead, it will use several smaller GPU chips alongside a much larger number of DRAM dies.

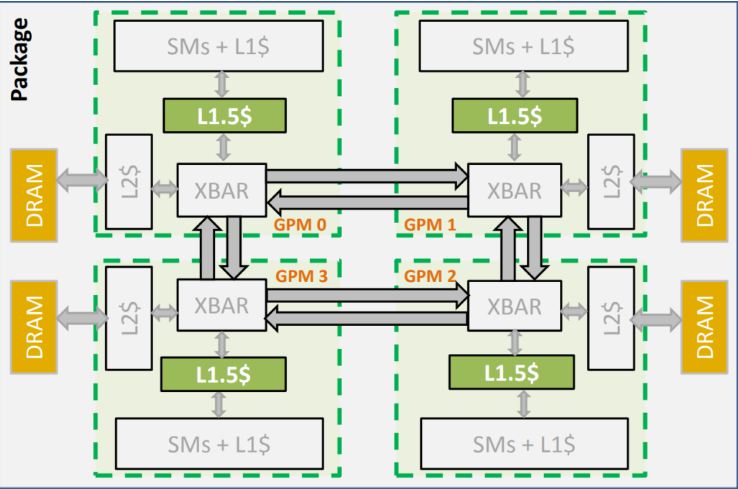

The DRAM dies, as well as the GPU, will be connected through an I/O and controller chipset on-die (and not on-chip).

This configuration will not only allow for smaller GPU Modules (GPMs); those modules will also be significantly easier to produce and far less expensive than before.

NVIDIA also simulated the performance of a 256 SM MCM GPU, with 64 SMs per GPU.

For the uninitiated, the top Volta chip right now sports 84 SMs with 5376 cores. The 256 SM MCM package the company is reportedly working on will feature as many as 16,384 cores.
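The core count above follows from simple arithmetic, sketched below in Python. The script uses only the figures quoted in this article and assumes, as the 16,384 figure implies, that the MCM design keeps Volta's cores-per-SM ratio; the variable and function names are illustrative only.

```python
# Figures quoted in the article.
VOLTA_SMS = 84        # SMs on the top Volta chip
VOLTA_CORES = 5376    # cores on the top Volta chip
MCM_SMS = 256         # SMs in the simulated MCM package
SMS_PER_GPU = 64      # SMs per GPU on the MCM package


def cores_per_sm(total_cores: int, sm_count: int) -> int:
    """Return cores per SM, assuming every SM has the same core count."""
    cores, remainder = divmod(total_cores, sm_count)
    if remainder:
        raise ValueError("core count is not evenly divisible by SM count")
    return cores


# Assumption: the MCM design keeps the same cores-per-SM ratio as Volta.
per_sm = cores_per_sm(VOLTA_CORES, VOLTA_SMS)
gpu_count = MCM_SMS // SMS_PER_GPU
mcm_cores = MCM_SMS * per_sm

print(f"Cores per SM (Volta): {per_sm}")
print(f"GPUs on the MCM package: {gpu_count}")
print(f"Total cores on the MCM package: {mcm_cores}")
```

Under that assumption, the 256 SMs split into four GPUs of 64 SMs each, and the total matches the 16,384 cores cited above.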

In the simulation, the optimized design was a whopping 22.8% faster than a baseline MCM-GPU. Even against the largest monolithic GPU that could be built in theory, which would house 128 SMs on a single GPU, the 256 SM package still fared noticeably better (by a 45.5% margin).

In addition, the design will flaunt a key architectural upgrade as well as enhanced interconnects. According to NVIDIA, each GPM (GPU Module) will likely be roughly 40% to 60% smaller than the biggest GPUs of today.

Here’s a very basic example of a GPU MCM: