How interesting would it be to create a computer that can beat any human at StarCraft 2? Well, we already have AlphaGo, which is a similar thing from Google’s DeepMind. And if the computer knows how to build a maxed-out 200 supply army and has a considerable amount of APM, then it’s certainly possible. A computer that played perfectly and outplayed humans would probably need only about 100 APM at most.

Defeating StarCraft 2 is easily the next most important thing for Google DeepMind

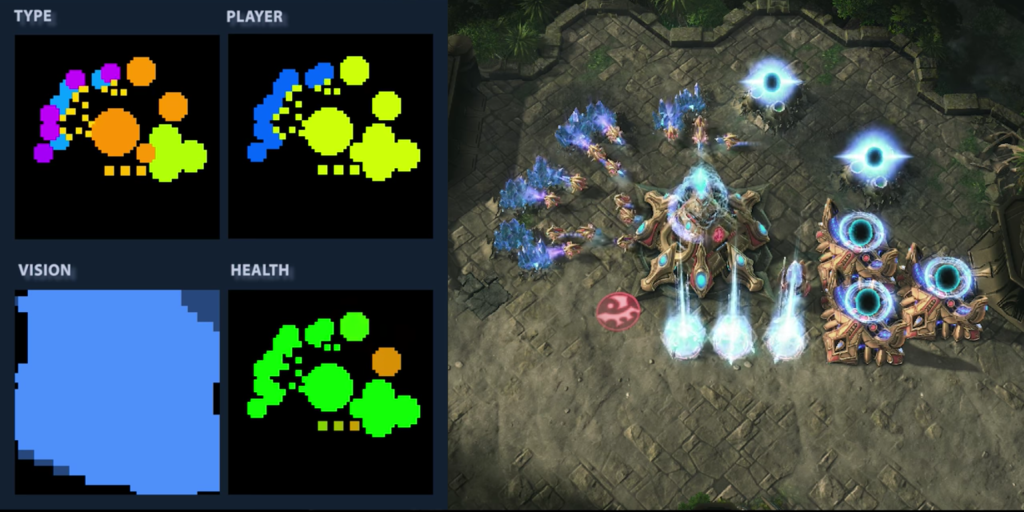

Players would love to play around with this and try to find the best architecture. We’re thinking a convolutional layer or two to make sense of the visual input, followed by several regular (fully connected) hidden layers for decision making. We’re curious what they’re using right now, but there was no info on the page where they mentioned their next endeavor.
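To make that idea concrete, here is a minimal sketch of the kind of network we have in mind: two convolutional layers over a tiny "frame", then a few fully connected layers that end in a probability for each action. Everything in it is an assumption for illustration (the name `TinyPolicyNet`, the 12x12 frame, the five actions, the random untrained weights); it is not what DeepMind uses, and a real attempt would use a proper deep learning library and a lot of training.

```python
import math
import random


def conv2d_relu(image, kernels):
    """Valid 2D convolution followed by ReLU.

    image: [C_in][H][W], kernels: [C_out][C_in][kH][kW].
    """
    height, width = len(image[0]), len(image[0][0])
    k_h, k_w = len(kernels[0][0]), len(kernels[0][0][0])
    out = []
    for kernel in kernels:
        feature_map = []
        for i in range(height - k_h + 1):
            row = []
            for j in range(width - k_w + 1):
                total = 0.0
                for c, channel in enumerate(image):
                    for di in range(k_h):
                        for dj in range(k_w):
                            total += kernel[c][di][dj] * channel[i + di][j + dj]
                row.append(max(total, 0.0))  # ReLU
            feature_map.append(row)
        out.append(feature_map)
    return out


def dense(vector, weights, activate=True):
    """Fully connected layer; weights is [n_out][n_in]."""
    out = [sum(w * v for w, v in zip(row, vector)) for row in weights]
    return [max(o, 0.0) for o in out] if activate else out


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def random_tensor(rng, *shape):
    """Nested lists of small random weights (untrained, illustration only)."""
    if len(shape) == 1:
        return [rng.gauss(0.0, 0.1) for _ in range(shape[0])]
    return [random_tensor(rng, *shape[1:]) for _ in range(shape[0])]


class TinyPolicyNet:
    """Hypothetical toy network: 2 conv layers, then 2 hidden layers and an output layer."""

    def __init__(self, in_channels=3, frame_size=12, n_actions=5, seed=0):
        rng = random.Random(seed)
        self.conv1 = random_tensor(rng, 4, in_channels, 3, 3)
        self.conv2 = random_tensor(rng, 8, 4, 3, 3)
        # Each 3x3 valid convolution shrinks the frame by 2 pixels per side.
        flat = 8 * (frame_size - 4) ** 2
        self.fc1 = random_tensor(rng, 32, flat)
        self.fc2 = random_tensor(rng, 16, 32)
        self.out = random_tensor(rng, n_actions, 16)

    def forward(self, frame):
        features = conv2d_relu(conv2d_relu(frame, self.conv1), self.conv2)
        flat = [v for fmap in features for row in fmap for v in row]
        hidden = dense(dense(flat, self.fc1), self.fc2)
        return softmax(dense(hidden, self.out, activate=False))


net = TinyPolicyNet()
frame = [[[0.5] * 12 for _ in range(12)] for _ in range(3)]  # fake RGB frame
action_probs = net.forward(frame)
```

`action_probs` holds one probability per made-up action. The convolutional part is there to pick up local visual patterns, and the dense part turns those into a decision.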

Google needs to give it audio cues too, or it’s going to lose to “Nuclear Launch Detected”. Plus, it should know how to gather information about its opponent and have a limited APM to make it “fair”. It needs limitations, otherwise a computer with unlimited actions per second would be impossible for a human to beat. A human player couldn’t win a fight when the computer can move every unit separately, for example splitting marines against banelings, which is technically what the current StarCraft 2 AI does.
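One simple way to enforce a “fair” APM limit is a sliding one-minute window: the bot may only act if it has issued fewer than the allowed number of actions in the last 60 seconds. The sketch below is only an illustration under that assumption; the class name `ApmLimiter`, the default cap of 180, and the idea of passing in timestamps by hand are all made up here, not taken from any real StarCraft 2 API.

```python
from collections import deque

WINDOW_SECONDS = 60.0  # APM is counted per minute


class ApmLimiter:
    """Hypothetical sliding-window cap on actions per minute."""

    def __init__(self, max_apm=180):
        self.max_apm = max_apm
        self.timestamps = deque()  # times of actions still inside the window

    def try_act(self, now):
        """Return True and record the action if the bot is under its cap."""
        # Forget actions that are a full minute old or older.
        while self.timestamps and now - self.timestamps[0] >= WINDOW_SECONDS:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.max_apm:
            return False  # over budget: the bot has to skip this action
        self.timestamps.append(now)
        return True


# Tiny cap of 3 APM so the behaviour is easy to follow by hand.
limiter = ApmLimiter(max_apm=3)
allowed = [limiter.try_act(t) for t in (0.0, 1.0, 2.0, 3.0, 61.0)]
```

With a cap of 3, the fourth action at 3 seconds is refused, and the one at 61 seconds goes through again because the earliest actions have dropped out of the window. A bot limited this way would have to choose which units are worth its clicks, much like a human does.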

The visual and mechanical skills required to play the game are the real challenge here, as they are far harder for computers to handle than games like chess, which are computationally complex but easy to describe. Recognizing what’s going on based on a video feed, managing and keeping track of things with a limited APM, dealing with hidden and unknown information, controlling the camera, making assumptions about your opponent’s strategy and so on are immensely difficult for today’s AI algorithms. In that regard, this would be a big step forward.